Continuous Open Source Integration

Challenges with continuous open source integration

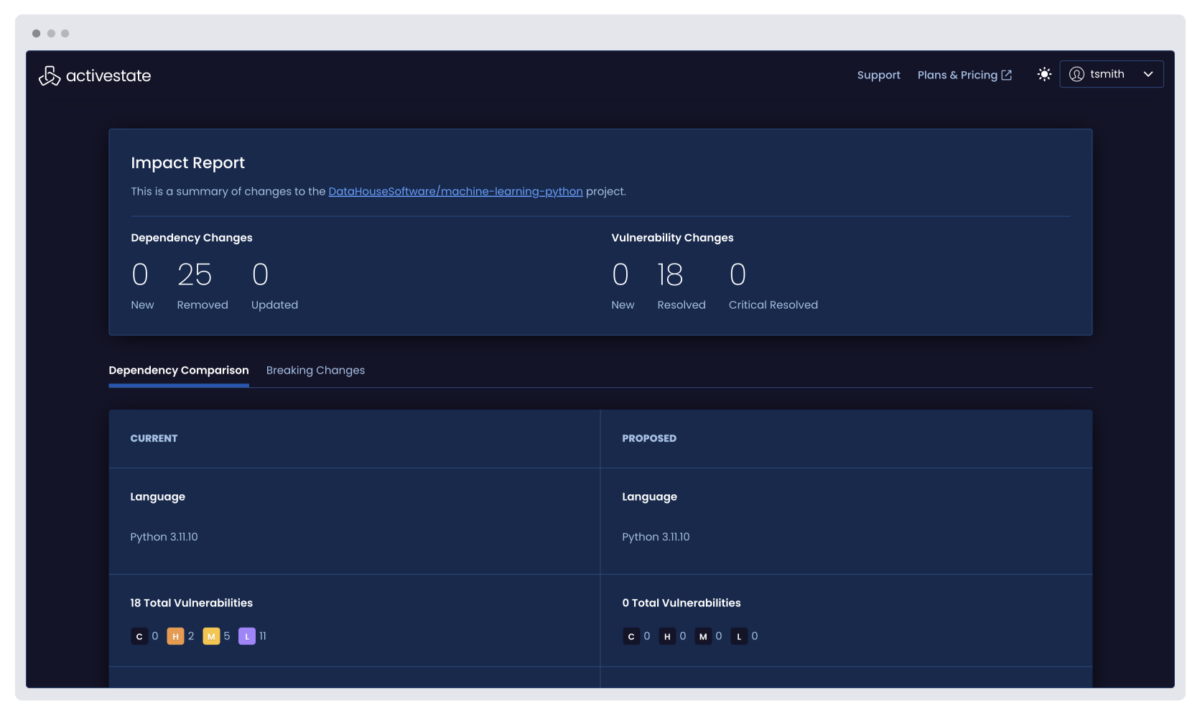

Updating open source dependencies is risky and time-consuming, leaving many teams stuck on vulnerable versions.

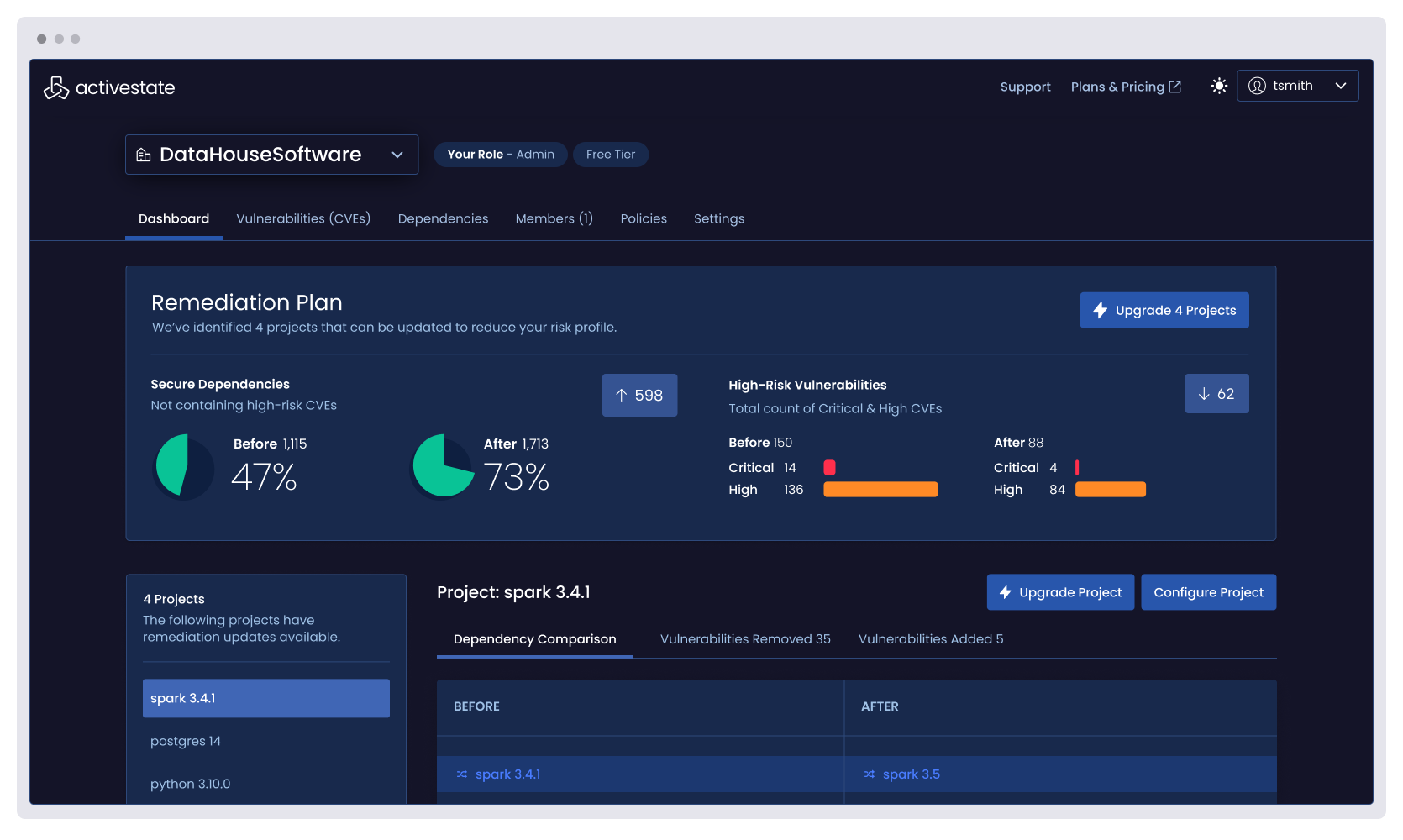

ActiveState helps you confidently integrate the latest security patches and feature updates while minimizing the risk of breaking changes, so you can evolve your applications without disrupting stability or security.

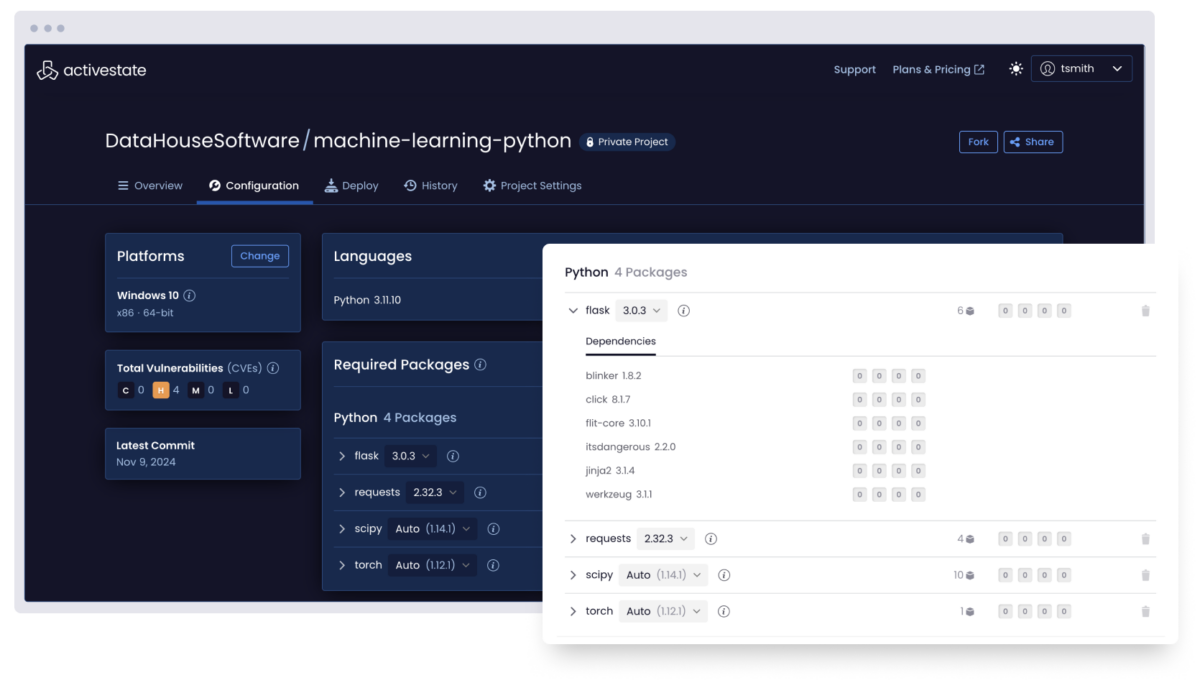

Resolving complex dependency chains and version conflicts wastes countless developer hours and delays critical security updates.

ActiveState automatically resolves these complex dependency relationships, transforming dependency hell into a straightforward path to secure, up-to-date applications.
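To make the idea of a version conflict concrete, here is a minimal sketch of the underlying problem: several components each accept only certain versions of a shared package, and a usable version must satisfy all of them. This is an illustration only, not ActiveState's resolver. The function name `compatible_versions` and the package names and version strings are hypothetical.

```python
from typing import Dict, Set


def compatible_versions(requirements: Dict[str, Set[str]]) -> Set[str]:
    """Return the versions accepted by every dependent.

    An empty result means the dependents' requirements conflict.
    """
    allowed = None
    for accepted in requirements.values():
        # Narrow the candidate set to versions this dependent also accepts.
        allowed = set(accepted) if allowed is None else allowed & accepted
    return allowed or set()


# Hypothetical example: three components share one dependency.
requirements = {
    "app": {"1.0", "1.1", "2.0"},
    "lib_a": {"1.1", "2.0"},
    "lib_b": {"1.0", "1.1"},
}
shared = compatible_versions(requirements)
```

Real resolvers work across entire dependency graphs and version ranges rather than a single shared package, which is why doing this by hand becomes so time-consuming.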

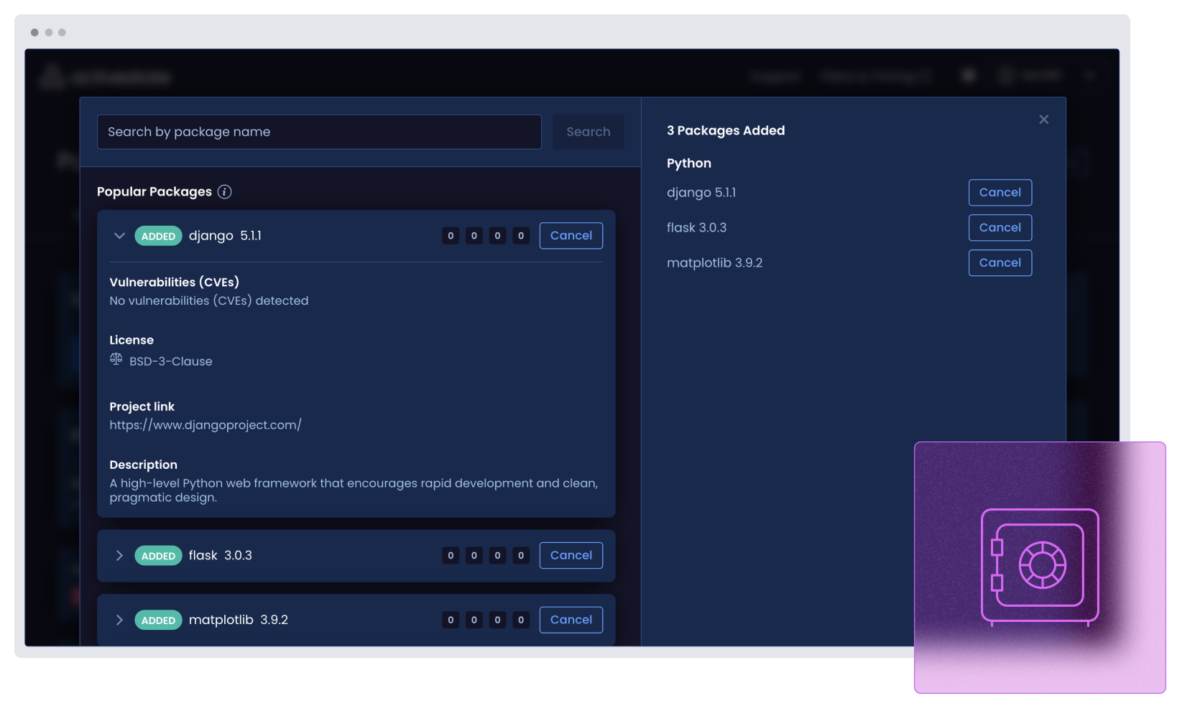

Open source packages disappear, get hijacked, or change unexpectedly, putting your builds at risk.

ActiveState maintains an immutable catalog of trusted open source that preserves version history and protects against typosquatting attacks, so you can confidently reproduce builds and integrate components from a curated, secure source.
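As a rough illustration of the two safeguards mentioned above, the sketch below flags package names that closely resemble a trusted name (a common typosquatting signal) and checks a downloaded artifact against a pinned SHA-256 digest. It uses only the Python standard library and is not ActiveState's implementation. The `TRUSTED` allowlist, the function names, and the 0.8 similarity cutoff are assumptions chosen for the example.

```python
import difflib
import hashlib
from typing import Optional, Set

# Hypothetical allowlist of trusted package names.
TRUSTED: Set[str] = {"requests", "numpy", "urllib3"}


def possible_typosquat(name: str, trusted: Set[str] = TRUSTED,
                       cutoff: float = 0.8) -> Optional[str]:
    """Return the trusted name that `name` closely resembles, or None."""
    if name in trusted:
        return None  # Exact match with a trusted package.
    matches = difflib.get_close_matches(name, sorted(trusted), n=1, cutoff=cutoff)
    return matches[0] if matches else None


def matches_pinned_hash(artifact: bytes, pinned_sha256: str) -> bool:
    """Check that an artifact's SHA-256 digest equals the pinned value."""
    return hashlib.sha256(artifact).hexdigest() == pinned_sha256
```

For example, `possible_typosquat("reqeusts")` returns `"requests"`, signaling that the name deserves a closer look before installation, while an artifact whose digest no longer matches its pinned value indicates the package changed after it was approved.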

The ActiveState difference

ActiveState’s platform transforms how you handle open source updates. By automating and simplifying the integration process, it dramatically reduces risk and overhead. Your team can focus on innovation, not maintenance headaches.

With ActiveState, staying current and secure becomes a smooth, continuous process rather than a series of disruptive, resource-intensive events.