Luckily, there are tools that simplify and automate many of the most painful parts of data preparation. In this post, I will discuss and demonstrate key features of two of them: Snorkel and OpenRefine.

Snorkel: Automated Training Data Preparation

The Snorkel project, which began at Stanford in 2016, is a Python library for creating, modeling, and managing training data. One of the biggest bottlenecks in preparing training data for Machine Learning projects is managing and labeling datasets. Snorkel addresses this bottleneck by letting you label data programmatically with labeling functions, which greatly reduces the amount of manual labeling required.

Getting started with Snorkel is a relatively painless process:

- Install Python on your machine

- Use pip to install Snorkel: pip install snorkel

Data labeling attaches a label, such as a category, to each record. Labels provide the context that later stages of a data analysis pipeline rely on; in supervised Machine Learning, they are the target values a model learns to predict. Let’s look at a sample Python script to see how data labeling works in Snorkel.

Labeling Data with Snorkel

In this particular example, we will load a CSV file that contains the following columns:

- id

- text

You can check out the full dataset (25 records) in my sample repository on GitHub.

Many of these records contain text related to sports. The goal of our Python script is to use the Snorkel library to label each record based on which sport the text discusses. To keep the example simple, we’ll do some very basic labeling to determine whether the text discusses football or baseball.

First, we’ll import the necessary modules for this example and define our constants:

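```python
import pandas as pd
from snorkel.labeling import PandasLFApplier, labeling_function

# Label constants. Snorkel treats -1 as "abstain" and expects real classes
# to be numbered 0, 1, 2, ..., so SKIP uses -1 here.
SKIP = -1
FOOTBALL = 0
BASEBALL = 1
```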

Next, we’ll need to write three separate functions:

- The first function will use pandas to import the data from the CSV into a dataframe

- The other two will be Snorkel labeling functions that look for occurrences of the words “bat” and “touchdown”:

  - If a record contains “bat,” the first labeling function labels it with the BASEBALL constant

  - If a record contains “touchdown,” the second labeling function labels it with the FOOTBALL constant

  - If a function’s keyword does not appear, that function labels the record with the SKIP constant (that is, it abstains)

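```python
def import_data():
    # Read the sample CSV with ISO-8859-1 (Latin-1) encoding.
    # Note the plain hyphens in the codec name; a typographic dash breaks the lookup.
    data_df = pd.read_csv("data/data.csv", encoding="iso-8859-1")
    return data_df


@labeling_function()
def bat(x):
    # Vote BASEBALL if the text mentions "bat"; otherwise abstain
    return BASEBALL if "bat" in x.text.lower() else SKIP


@labeling_function()
def touchdown(x):
    # Vote FOOTBALL if the text mentions "touchdown"; otherwise abstain
    return FOOTBALL if "touchdown" in x.text.lower() else SKIP
```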
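One caveat: `"bat" in x.text.lower()` is a substring test. It also fires on words such as "battle" or "combat," which can inflate the BASEBALL votes. The following minimal sketch shows a whole-word check using Python's standard `re` module. The helper name `mentions_word` is hypothetical. You could call it inside a labeling function in place of the `in` test.

```python
import re


def mentions_word(text: str, word: str) -> bool:
    """Return True if `word` appears in `text` as a whole word (case-insensitive)."""
    # \b marks a word boundary, so "bat" matches "the bat" but not "battle"
    pattern = r"\b" + re.escape(word) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


# Hypothetical drop-in replacement for the substring test in bat():
#     return BASEBALL if mentions_word(x.text, "bat") else SKIP
print(mentions_word("He picked up the bat", "bat"))   # True
print(mentions_word("It was a long battle", "bat"))   # False
```

The trade-off is that plurals such as "bats" no longer match unless you add them explicitly, so the right choice depends on your data.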

Finally, we will import the data, apply the labeling functions to the dataframe, and print the coverage of each function, that is, the percentage of records it labels rather than skips.

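```python
sample_df = import_data()

# Apply both labeling functions to every row; the result is a NumPy
# label matrix with one row per record and one column per function
lfs = [bat, touchdown]
applier = PandasLFApplier(lfs=lfs)
train = applier.apply(df=sample_df)

# Coverage = fraction of records for which each function did not abstain
coverage_bat, coverage_touchdown = (train != SKIP).mean(axis=0)
print(f"baseball: {coverage_bat * 100:.1f}%")
print(f"football: {coverage_touchdown * 100:.1f}%")
```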

Here is the output:

```
baseball: 12.0%
football: 20.0%
```

In other words, out of 25 records, 3 contain the word “bat” and 5 contain the word “touchdown.”
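To make these numbers concrete, here is a plain-Python sketch of the label matrix that the applier produces and of how coverage is computed from it. No Snorkel is required. The sketch assumes Snorkel's convention of -1 for an abstain and uses a small hypothetical matrix rather than the real dataset. It also combines each record's votes with a simple majority vote, which is similar in spirit to Snorkel's `MajorityLabelVoter` but is not its implementation.

```python
from collections import Counter

SKIP, FOOTBALL, BASEBALL = -1, 0, 1  # -1 = abstain, as in Snorkel

# Hypothetical label matrix: one row per record, one column per labeling
# function (column 0 = bat, column 1 = touchdown)
label_matrix = [
    [BASEBALL, SKIP],
    [SKIP, FOOTBALL],
    [SKIP, SKIP],
    [BASEBALL, FOOTBALL],  # the two functions conflict on this record
    [SKIP, FOOTBALL],
]


def coverage(matrix, column):
    """Fraction of records on which the given labeling function did not abstain."""
    return sum(row[column] != SKIP for row in matrix) / len(matrix)


def majority_vote(row):
    """Combine one record's votes; abstain if there are no votes or a tie."""
    votes = Counter(label for label in row if label != SKIP)
    if not votes:
        return SKIP
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return SKIP  # tie between labels
    return ranked[0][0]


print(f"bat coverage: {coverage(label_matrix, 0):.0%}")        # 40%
print(f"touchdown coverage: {coverage(label_matrix, 1):.0%}")  # 60%
print([majority_vote(row) for row in label_matrix])            # [1, 0, -1, -1, 0]
```

With the real dataset, the same calculation gives 3/25 = 12% for `bat` and 5/25 = 20% for `touchdown`. In Snorkel itself, `LFAnalysis(L=train, lfs=lfs).lf_summary()` (from `snorkel.labeling`) reports each function's coverage along with its overlaps and conflicts.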

Snorkel’s advantages over other automation tools include:

- It is well documented, which makes it quick and easy to start writing useful code.

- It offers a wide variety of tutorials that can help any developer with some Python experience quickly implement Snorkel in an AI/ML project.

OpenRefine: Automated Data Manipulation

OpenRefine (formerly Google Refine) is an open source tool for exploring, cleaning, transforming, and reconciling data. It provides a platform for taking messy data and massaging it into a format free of show-stopping inconsistencies and formatting issues.

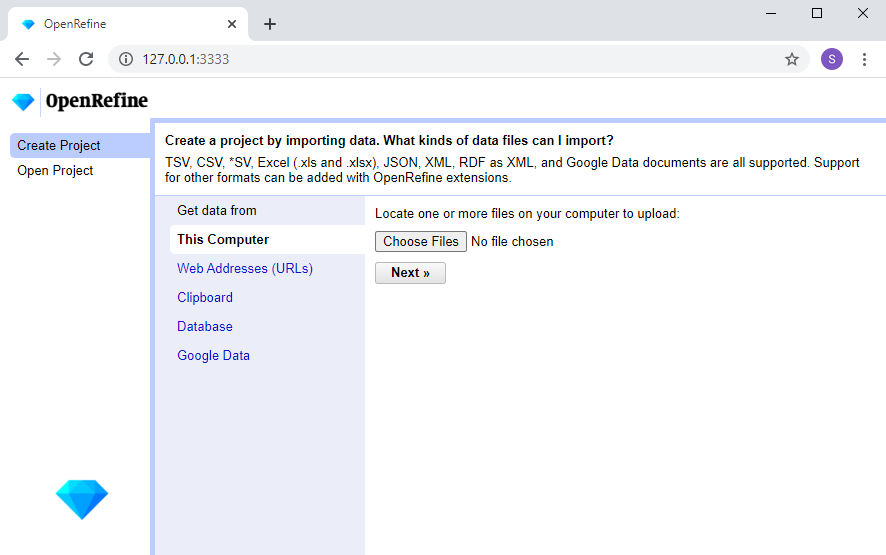

To get started with OpenRefine:

- Download the latest version from the releases section of the OpenRefine GitHub repository.

- Extract the downloaded archive to the desired location on your machine.

- Navigate to the directory where you extracted it and run openrefine.exe (on Windows; on Linux, run ./refine instead).

- Point your browser at http://127.0.0.1:3333/ to access your OpenRefine instance.

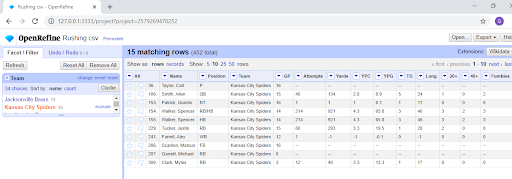

You can work directly in the OpenRefine UI by selecting “Create Project” in the left navigation pane and choosing a data file to import, such as the Football Rushing CSV file shown here:

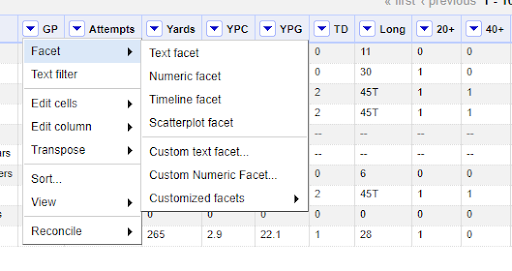

After you import the file, you can explore and manipulate the data with Facets to glean insights.

For example, we might apply a Facet to the team column so that we can check the rushing statistics for all players on a particular team. Facets also let you edit a specific subset of data in bulk. This gives you a way to eliminate erroneous records, or to correct records with missing or incorrect values in a particular field.
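Facets in OpenRefine are point-and-click, but the idea behind them is easy to express in code. The following is a plain-Python analogy, not OpenRefine's implementation:

- A text facet counts the rows for each distinct value in a column.
- Selecting a facet value filters the rows.
- A bulk edit rewrites every row in that selection.

The column names (`player`, `team`, `yards`) and the rows below are hypothetical stand-ins, not the contents of the Football Rushing file.

```python
from collections import Counter

# Hypothetical rows standing in for the Football Rushing CSV
rows = [
    {"player": "A. Runner", "team": "Bears", "yards": 1020},
    {"player": "B. Back", "team": "bears", "yards": 640},  # inconsistent casing
    {"player": "C. Carrier", "team": "Lions", "yards": 880},
    {"player": "D. Dasher", "team": "Bears", "yards": 310},
]


def text_facet(rows, column):
    """Count how many rows hold each distinct value, like a text facet."""
    return Counter(row[column] for row in rows)


def select(rows, column, value):
    """Return the rows matching one facet value."""
    return [row for row in rows if row[column] == value]


def bulk_edit(rows, column, old, new):
    """Replace `old` with `new` in every matching row, like editing a facet value."""
    for row in select(rows, column, old):
        row[column] = new


print(dict(text_facet(rows, "team")))  # {'Bears': 2, 'bears': 1, 'Lions': 1}
bulk_edit(rows, "team", "bears", "Bears")  # fix the inconsistent value in one step
print(dict(text_facet(rows, "team")))  # {'Bears': 3, 'Lions': 1}
print([r["player"] for r in select(rows, "team", "Bears")])
```

The facet counts are what make the stray "bears" value visible in the first place. That visibility is why faceting is usually the first step before a bulk edit.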

OpenRefine can be useful to teams for several reasons:

- It provides a user-friendly interface that makes the tool and its functionality easy to learn.

- It has a large and active community that provides excellent support and helps the product continue to grow and improve over time.

- It can be integrated into a data analysis pipeline through Python scripts that use third-party libraries like openrefine-client, which wrap the OpenRefine API.
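Wrapper libraries such as openrefine-client handle the HTTP details for you. To give a rough idea of what happens underneath, the following standard-library sketch lists the projects on a local OpenRefine instance. It makes two assumptions:

- The server runs at the default address, http://127.0.0.1:3333.
- It exposes the `get-all-project-metadata` command, returning JSON with a `projects` map keyed by project ID.

Check the OpenRefine API documentation for your version before relying on this. The `fetch` parameter is a hypothetical hook that lets you try the parsing without a running server.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:3333"  # default local OpenRefine address


def http_get(url: str) -> str:
    """Fetch a URL and return the response body as text."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8")


def list_projects(base_url: str = BASE_URL, fetch=http_get) -> dict:
    """Return {project_id: project_name} for every project on the server.

    Assumes the endpoint returns JSON shaped like
    {"projects": {"<id>": {"name": "..."}, ...}}.
    """
    body = fetch(f"{base_url}/command/core/get-all-project-metadata")
    projects = json.loads(body).get("projects", {})
    return {project_id: meta.get("name") for project_id, meta in projects.items()}
```

With OpenRefine running, calling `list_projects()` should return a dictionary mapping project IDs to project names. A pipeline could then use those IDs for further API calls.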

Summary

Over the last decade, many organizations have adopted big data strategies, increasing their data storage capacity and implementing tool sets to streamline data collection. But the more data they collect, the more acute the data cleansing problem becomes, because data is rarely free of inconsistencies, redundancies, and inaccuracies when it is first collected. Efficient data cleansing is therefore essential if organizations want to use their data in a timely manner without an undue investment of time and resources.

Tools like Snorkel and OpenRefine (with openrefine-client), along with Python packages like pandas, are just the tip of the iceberg when it comes to automating the cleaning, refining, and classifying of data. Clean, labeled data is needed primarily for Machine Learning projects, which are increasingly key to providing business insights across virtually all industries.

For those who prefer to clean data through a user-friendly UI, OpenRefine is a good choice. Experienced Python developers, however, will likely prefer Snorkel for its ability to label and manage data programmatically for Machine Learning applications.

Why use ActivePython for Data Science and Machine Learning

ActivePython is built for your data science and development teams to move fast and deliver great products to the standards of today’s top enterprises. Pre-bundled with the most important packages data scientists need, ActivePython is pre-compiled so you and your team don’t have to waste time configuring the open source distribution. You can focus on what’s important: spending more time building algorithms and predictive models against your big data sources, and less time on system configuration.

Some Popular Python Packages You Get Pre-compiled – with ActivePython

Python for Data Science/Big Data/Machine Learning

- pandas (data analysis)

- NumPy (multi-dimensional arrays)

- SciPy (algorithms to use with NumPy)

- HDF5 (store & manipulate data)

- Matplotlib (data visualization)

- Jupyter (research collaboration)

- PyTables (managing HDF5 datasets)

- HDFS (C/C++ wrapper for Hadoop)

- pymongo (MongoDB driver)

- SQLAlchemy (Python SQL Toolkit)

- redis (Redis access libraries)

- pyMySQL (MySQL connector)

- scikit-learn (machine learning)

- TensorFlow (deep learning with neural networks)*

- keras (high-level neural networks API)

Contact us to learn more about using ActivePython in your organization.