All Blog Posts

Intelligent SBOM Ingestion and Breaking Change Analysis

Another Slack alert. “Critical vulnerability detected: CVE in package X.” You pull up the advisory…and unfortunately, it’s legit: publicly known and actively exploited. You check ...

From Vulnerable to Unbreakable: The Evolution of Container Security in Modern Software Development

In an era where software powers everything from mobile banking to space exploration, container security has emerged as a critical frontier in protecting our digital ...

Kubernetes Runtime Security: How to Detect and Respond to Live Threats in Your Container

As container usage becomes standard across development teams, Kubernetes stands out as the default orchestration platform. But while enterprise engineering teams have made major progress ...

Introducing ActiveState’s Secure, Custom Container Images

Over several decades, ActiveState has addressed a critical challenge in software development: securing the open-source landscape. However, this landscape is changing rapidly, and the way ...

ActiveState Drives the Future of Security with Intelligent Remediation at Gartner Security & Risk Management Summit

Last week, ActiveState participated in the Gartner Security & Risk Management Summit in National Harbor, Maryland, an event that consistently brings together the brightest minds ...

10 Container Security Best Practices Every Engineering Team Should Know

Containers have become the gold standard for building modern, cloud-based software. It’s no surprise – using containers for app development has plenty of benefits, such ...

ActiveState Recognized Among Top Global Innovators in ComponentSource 2025 Awards

We are thrilled to announce that ActiveState has been recognized by ComponentSource in their prestigious 2025 Awards, celebrating our standing as a bestselling brand and ...

What Is Vulnerability Prioritization? A Guide for Enterprise Cybersecurity Teams

Vulnerability prioritization is far from simple. Yet, many DevSecOps teams are manually evaluating which vulnerabilities to remediate based on severity alone. Only considering the severity ...

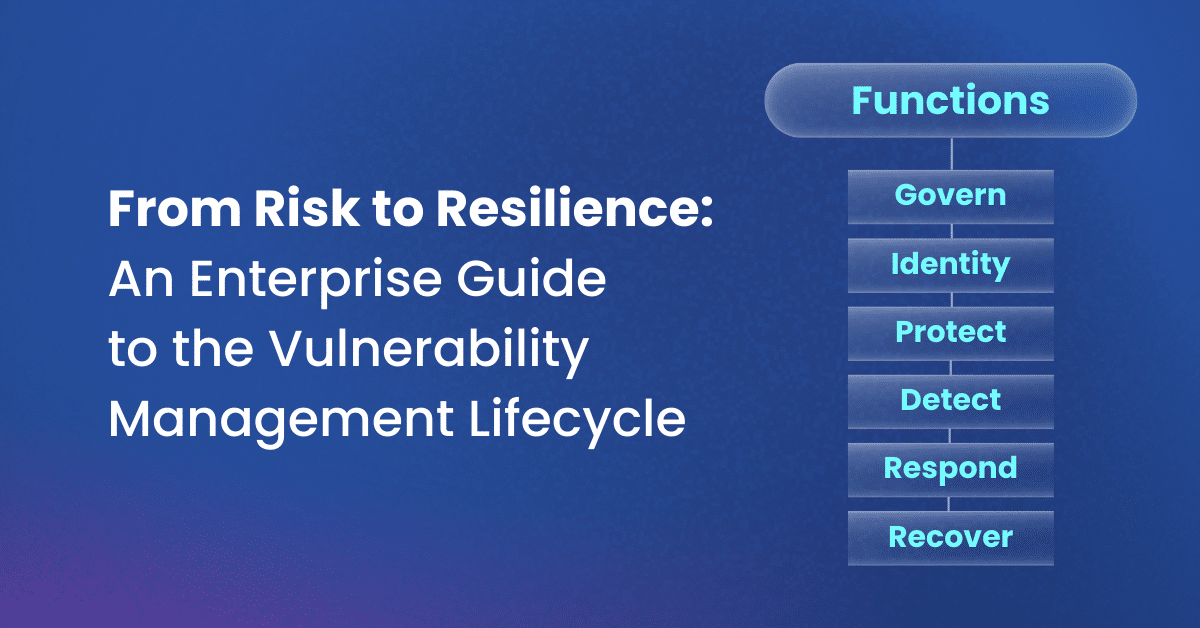

From Risk to Resilience: An Enterprise Guide to the Vulnerability Management Lifecycle

Vulnerability management shouldn’t be treated as a ‘set it and forget it’ type of effort. The landscape of cybersecurity threats is ever-evolving. To face the ...

Learnings & Top Security Trends from ActiveState at RSA 2025

RSAC 2025, held at the Moscone Center in San Francisco from April 28th to May 1st, brought together industry leaders under the central theme of ...

AI-Powered Vulnerability Management: The Key to Proactive Enterprise Threat Detection

AI is no longer a novelty. Nearly all of the tools modern enterprise software teams rely on, from project management and code intelligence to security ...

Navigating the Open Source Landscape: How Financial Institutions are Bridging the Vulnerability Management Gap with ActiveState

The financial services sector operates under a unique confluence of stringent regulatory demands, the need to safeguard vast amounts of sensitive data, and the imperative ...
