ActiveState Tcl

Why ActiveState Tcl?

ActiveState Tcl is 100% compatible with community Tcl, but it is built automatically from vetted source code to ensure its security and integrity. Secure your Tcl software supply chain.

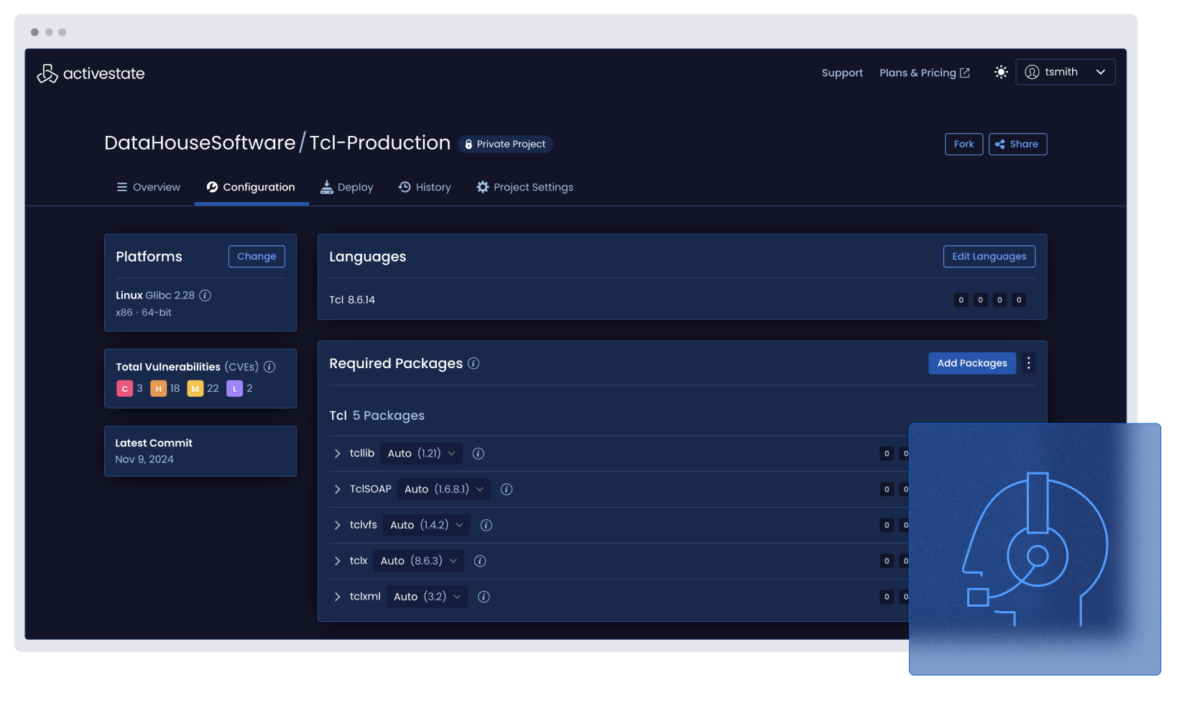

Stakeholders from security, compliance, and IT to developers, QA, and DevOps can collaborate centrally to enforce curation, compliance, and consistency through policy for all Tcl projects across the organization. Eliminate the risks inherent in managing Tcl on a per-project basis.

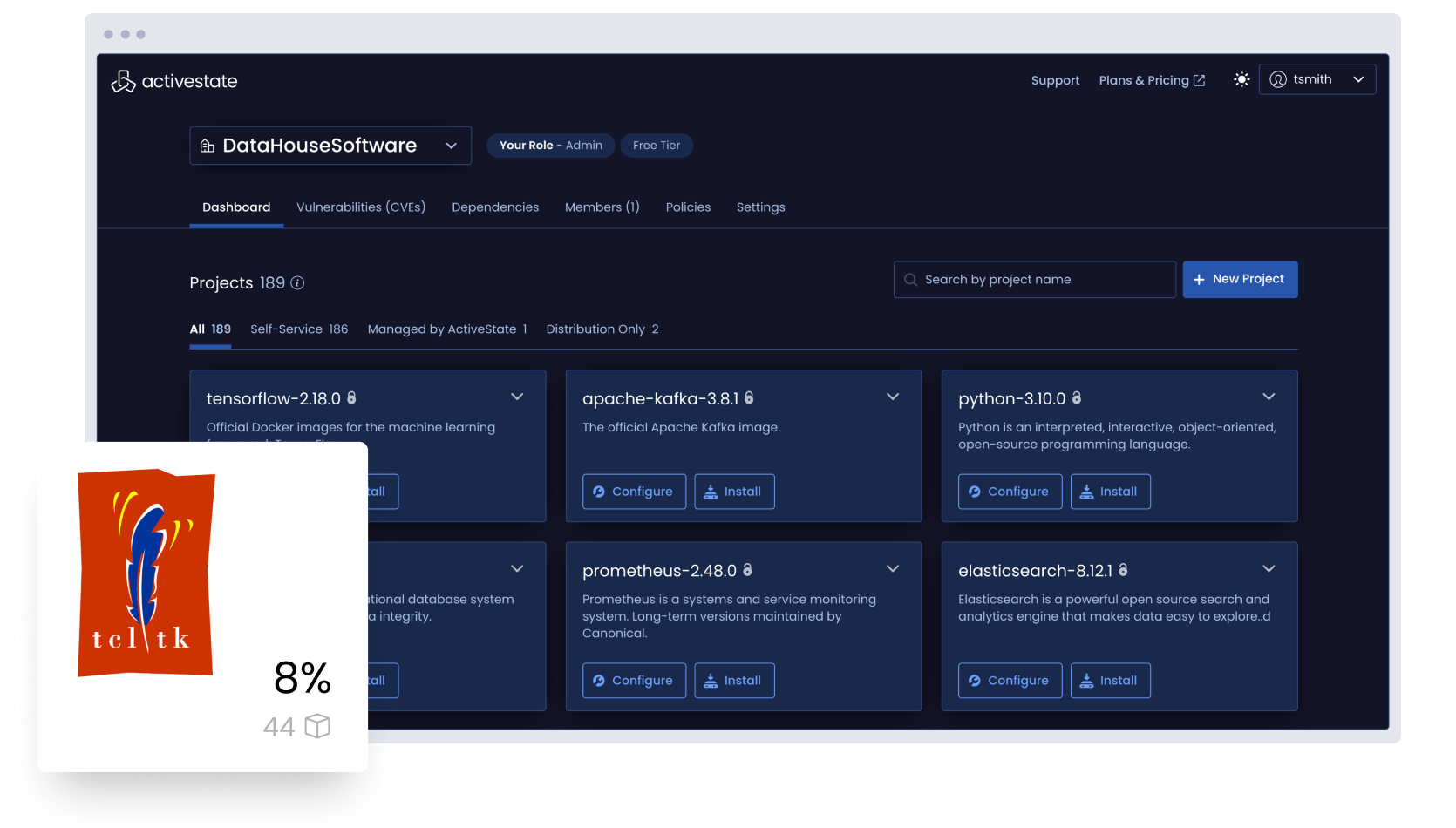

Manage all the Tcl in your organization

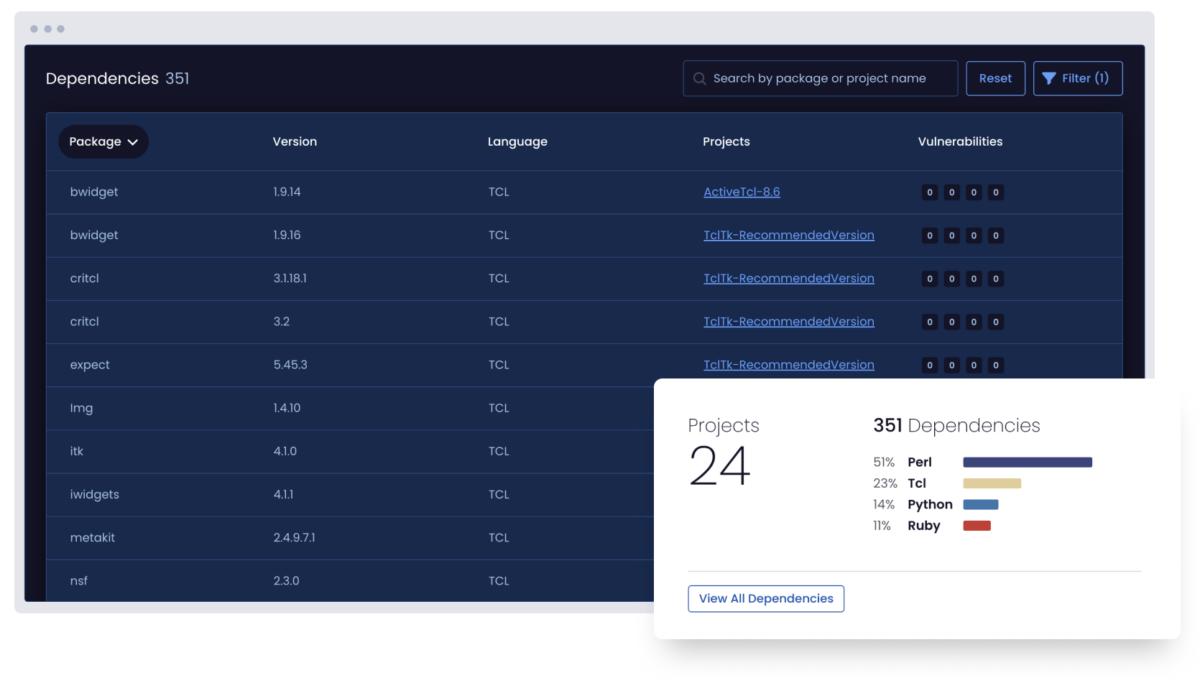

Visualize all the Tcl deployed across your organization by top-level, transitive, and shared dependencies whether or not you use ActiveState Tcl.

But for those looking to curate a catalog of securely built Tcl artifacts, ActiveState Tcl can automatically build, centrally manage, and deploy Tcl runtimes, ensuring consistency between environments from dev and test to CI/CD and production.

Analyze all the Tcl deployed across your organization by license, vulnerability status, and more, whether or not you use ActiveState Tcl.

Collaborate centrally with security, compliance, and IT personnel to implement a governance policy that can be applied to all Tcl deployments. Flag policy violations, notify stakeholders, approve exceptions, and create an audit trail to ensure security and compliance with IT rules, industry guidelines, and government legislation.

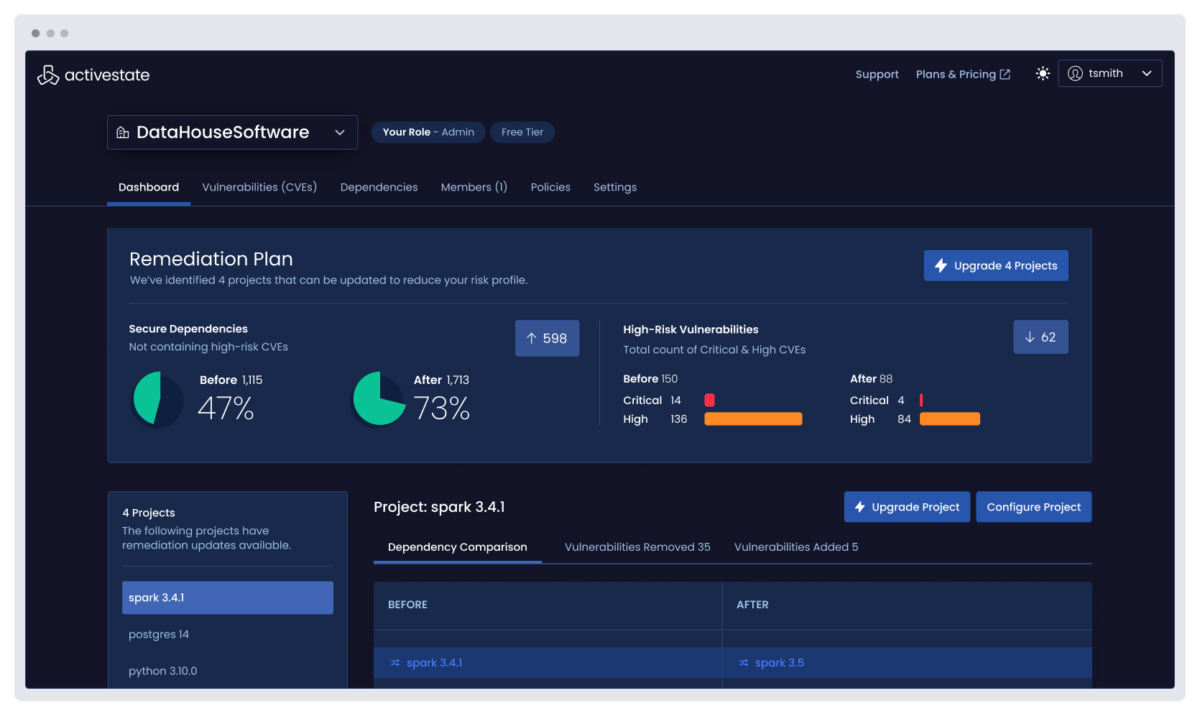

Remediate Tcl vulnerabilities faster by centrally identifying the vulnerabilities in every project across your enterprise. This eliminates the threat of unidentified vulnerabilities and lets you prioritize remediation according to their impact.

Choosing to work with ActiveState Tcl means you can also automatically rebuild runtime environments with fixed versions of vulnerable dependencies, reducing Mean Time To Remediation (MTTR).

Support the Tcl you depend on for your commercial applications, even beyond EOL.

ActiveState provides long-term support for Tcl deployments, ensuring you can continue to benefit from business-critical applications even after community support is no longer available. Let ActiveState backport security fixes so you can free up your team to focus on innovation.

Learn more about the benefits of working with ActiveState Tcl

ActiveState Python and Tcl Case Study

Before purchasing ActiveState, one of the key issues Mentor struggled with was corporate governance of open source licenses.

The Frontline of Attack – Securing Your Python, Perl and Tcl Supply Chains

Learn how attacks on open source supply chains impact your organization, and how you can secure your Python, Perl and Tcl environments accordingly.

Solving Tech Debt With Tcl

Explore the benefits of using Tcl to address your tech debt, with a practical example of wrapping an existing API with Tcl quickly and easily.