- Answering a wide range of general questions

- Suggesting solutions to a wide range of problems

- Having wide-ranging conversations across a variety of disciplines

One field in which users have discovered ChatGPT excels is generating programming code. There has been plenty of speculation that ChatGPT will replace programmers or even change the way we think. This post, however, focuses on the tool's actual capabilities today rather than on possibilities and expectations for the future.

In this post, I will explain ChatGPT's code generation capabilities and compare them against industry standards by checking key algorithmic and semantic outcomes. The criteria I will evaluate ChatGPT on are:

- Ability to generate code that is correct

- Ability to produce effective code (i.e., clear, concise code rather than spaghetti code)

- Ability to create maintainable code (i.e., code that is understandable by the humans who will need to maintain it)

- Ability to generate code that is free of vulnerabilities/security concerns.

The main aim of this post is to clarify some of the benefits and caveats of using ChatGPT in the real world. TL;DR? You get what you pay for.

ChatGPT Code Generation Examples

Let's take a look at a few straightforward Python code examples where ChatGPT might either give you a reasonable answer or backfire due to inaccuracies.

Generate a Python Function with ChatGPT

For this example, I asked ChatGPT to “write me a Python function that trims a specified character from a string.”

The answer I get back is plain and simple:

```python
def trim_char(string, char):
    """
    This function takes a string and a character and removes all instances
    of that character from the beginning and end of the string.
    """
    return string.strip(char)
```

It also gives me an example:

```python
my_string = "###Hello World###"
char_to_trim = "#"
trimmed_string = trim_char(my_string, char_to_trim)
print(trimmed_string)
```

Okay, that’s pretty straightforward, which is what we should expect given that I asked something that’s not only trivial to do, but is also available in the Python Standard Library. Let’s try something a little more advanced.
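Even a one-liner like this deserves a quick check of its edge cases. str.strip() treats its argument as a set of characters, not as a substring. That is fine for a single character, but it can surprise you if someone later passes several. The sketch below reuses ChatGPT's function unchanged. It contrasts the function with str.removeprefix() and str.removesuffix() (Python 3.9+), which match an exact substring. The sample strings are illustrative.

```python
def trim_char(string, char):
    """ChatGPT's version: strip every leading/trailing character found in `char`."""
    return string.strip(char)

# With a single character, strip() does what the prompt asked for.
print(trim_char("###Hello World###", "#"))  # Hello World

# With several characters, strip() treats them as a set, so order is ignored:
# both the leading "xy" and the trailing "yx" are removed.
print(trim_char("xyHelloyx", "xy"))  # Hello

# To remove an exact substring instead, use removeprefix()/removesuffix().
# The trailing "yx" is kept here because it is not the substring "xy".
print("xyHelloyx".removeprefix("xy").removesuffix("xy"))  # Helloyx
```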

Generate an Image with ChatGPT Using Python Imaging Library (PIL)

Now I’ll ask ChatGPT to “write a Python function that generates an 800 X 600 pixel image of the Yin-Yang symbol using a Python Image Library PIL.”

The answer is:

```python
from PIL import Image, ImageDraw

def generate_yin_yang_image():
    # Create a new image with a white background
    image = Image.new("RGB", (800, 600), "white")
    draw = ImageDraw.Draw(image)

    # Draw the black half circle
    draw.pieslice((0, 0, 800, 600), 0, 180, fill="black")

    # Draw the white half circle
    draw.pieslice((0, 0, 800, 600), 180, 360, fill="white")

    # Draw the black and white circles
    draw.ellipse((200, 200, 600, 600), fill="white", outline="black")
    draw.ellipse((200, 0, 600, 400), fill="black", outline="black")

    # Save and show the image
    image.save("yin-yang.png")
    image.show()
```

That looks great on paper, but is it correct? Let’s try it out:

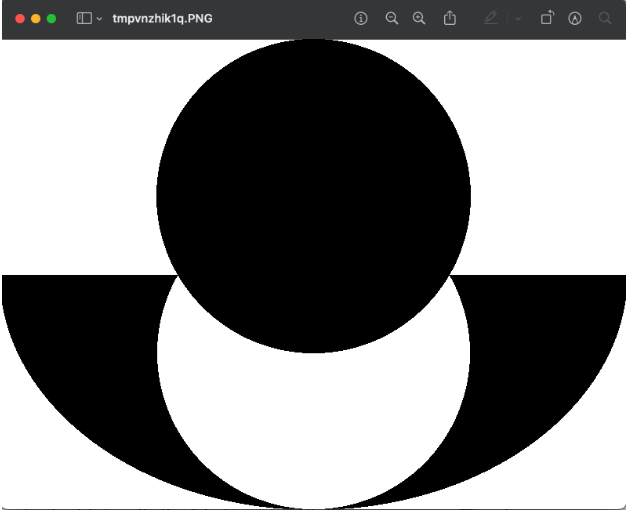

Figure 1: The Yin-Yang Symbol According to ChatGPT

The result suggests that ChatGPT has no real grasp of what the image should look like, so I wouldn't trust it with programming tasks that are tied to the visual world. The lesson here is to be very careful when asking it to write code that draws graphical entities, presumably including charts and graphs.
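For comparison, here is a hand-written sketch of the same task. It was not generated by ChatGPT. It assumes Pillow (the maintained fork of PIL) is installed. The outer radius, the 50-pixel margin, and the dot size are arbitrary choices. The key geometry is a black left half, plus two half-radius circles stacked vertically that form the S-shaped divider. Each lobe then gets a dot of the opposite color.

```python
from PIL import Image, ImageDraw

def generate_yin_yang_image(size=(800, 600), path=None):
    """Draw a Yin-Yang symbol centered on a white canvas of the given size."""
    width, height = size
    image = Image.new("RGB", size, "white")
    draw = ImageDraw.Draw(image)

    # Geometry: outer radius r, centered on the canvas with a 50 px margin.
    cx, cy = width // 2, height // 2
    r = min(width, height) // 2 - 50
    half = r // 2   # radius of the two inner circles
    dot = r // 8    # radius of the small dots

    def circle(x, y, radius, **kwargs):
        draw.ellipse((x - radius, y - radius, x + radius, y + radius), **kwargs)

    # 1. Left half of the outer circle is black. Pillow measures angles
    #    clockwise from 3 o'clock, so 90..270 degrees is the left side.
    draw.pieslice((cx - r, cy - r, cx + r, cy + r), 90, 270, fill="black")
    # 2. Two half-size circles form the S-shaped divider.
    circle(cx, cy - half, half, fill="black")
    circle(cx, cy + half, half, fill="white")
    # 3. A dot of the opposite color sits in the middle of each lobe.
    circle(cx, cy - half, dot, fill="white")
    circle(cx, cy + half, dot, fill="black")
    # 4. Outline the whole symbol last so nothing paints over it.
    circle(cx, cy, r, outline="black", width=3)

    if path:
        image.save(path)
    return image
```

Calling `generate_yin_yang_image(path="yin-yang.png").show()` saves and displays the image, as ChatGPT's version did. Returning the image instead of only saving it also makes the function easy to check in a unit test by sampling pixel colors.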

Find a GraphQL Resolver Name/Path with ChatGPT Using Graphene

Let’s ask it to “write code to find a GraphQL resolver name/path without executing the resolver for the query in Python Graphene.”

ChatGPT’s answer is:

```python
from graphene import ObjectType, String, Schema

class Query(ObjectType):
    hello = String(name=String(default_value="world"))

    def resolve_hello(self, info, name):
        return f"Hello {name}!"

schema = Schema(query=Query)

# Get the resolver path for the 'hello' field
field_name = 'hello'
field = schema.get_query_type().fields[field_name]
resolver_path = field.resolver_info.path
print(f"The resolver path for '{field_name}' is: {resolver_path}")
```

Unfortunately, running this code does not work:

```text
Traceback (most recent call last):
  File "/Users/theo.despoudis/Workspace/wpe-merge/sink/tempCodeRunnerFile.python", line 1, in <module>
    field_name
NameError: name 'field_name' is not defined
```

ChatGPT's use of the library has two errors:

- The Graphene “Schema” object is not the underlying GraphQL schema in which we want to look up the query resolver. We should be using its “graphql_schema” attribute, which holds that schema.

- There is no “get_query_type” method.

The actual code should have been:

```python
def get_resolver_field(schema, name):
    return schema.graphql_schema.query_type.fields[name]
```

This illustrates one of the weaknesses of ChatGPT's current model: it can only reference information it was trained on. As a result, it doesn't know about code, libraries, or features created after 2021. Software is an extremely fast-moving domain, and until ChatGPT can be trained in real time, it simply won't be able to keep up. The code ChatGPT generated may have been correct at one point in time, but it isn't correct now.

You could ask for code that targets a specific library version, but that only pushes the problem further down the road. And to be honest, even the corrected code is not the most elegant way of retrieving this information, since it relies on the library's internal implementation.

Maybe we need an example that’s still realistic but has a potential solution that doesn’t rely on intricate knowledge.

Generate a Group By Function with ChatGPT

Now let's ask ChatGPT to “write a function in Python that aggregates a list of metric values grouped by their storage_type label.” I also give it a list of metrics in the following format:

```python
metrics = [
    {
        "created_at": "2023-3-3T00:00:00.000Z",
        "value": 10000000000,
        "labels": {
            "environment": "production",
            "storage_type": "files",
        },
    },
    {
        "created_at": "2023-3-4T00:00:00.000Z",
        "value": 50000000000,
        "labels": {
            "environment": "development",
            "storage_type": "database",
        },
    },
    {
        "created_at": "2023-3-4T00:00:00.000Z",
        "value": 10000000000,
        "labels": {
            "environment": "staging",
            "storage_type": "database",
        },
    },
]
```

As you can see, this is a list of objects with the following fields:

- created_at: The date on which the metric was created.

- value: The value of the metric, representing a size in bytes.

- labels: An object that contains metric metadata. It has the following properties:

  - environment: The environment in which the metric exists. It can have one of three possible values: production, staging, or development.

  - storage_type: The type of storage that this metric value represents. It can have one of two possible values: database or files.
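The field descriptions above amount to a small schema. If you want to state it precisely, whether in your own code or in a prompt, Python's typing.TypedDict and Literal can express it. The minimal sketch below does that. The names Environment, StorageType, Labels, and Metric are illustrative only; they are not part of the original prompt.

```python
from typing import Literal, TypedDict

# Allowed label values, as listed in the field descriptions.
Environment = Literal["production", "staging", "development"]
StorageType = Literal["database", "files"]

class Labels(TypedDict):
    environment: Environment
    storage_type: StorageType

class Metric(TypedDict):
    created_at: str  # timestamp string, e.g. "2023-3-3T00:00:00.000Z"
    value: int       # size in bytes
    labels: Labels

# At runtime a Metric is an ordinary dict; the types only help static checkers.
example: Metric = {
    "created_at": "2023-3-3T00:00:00.000Z",
    "value": 10000000000,
    "labels": {"environment": "production", "storage_type": "files"},
}
```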

The function should transform this list of objects into a new list that aggregates the totals for each “storage_type,” in the following format:

```python
[
    {
        "environmentTotal": {
            "development": 50000000000,
            "production": 0,
            "staging": 10000000000,
        },
        "storage_type": "database",
        "total": 60000000000,
    },
    {
        "environmentTotal": {
            "development": 0,
            "production": 10000000000,
            "staging": 0,
        },
        "storage_type": "files",
        "total": 10000000000,
    },
]
```

Since there are two possible values for “storage_type,” the output contains two items. The fields should be:

- storage_type: The type of storage that this aggregate value represents.

- total: The sum of all values for that “storage_type,” across all environments.

- environmentTotal: An object that contains aggregated totals for each environment type. It has the following subfields:

  - development: The total metric value for the development environment of that particular “storage_type.”

  - production: The total metric value for the production environment of that particular “storage_type.”

  - staging: The total metric value for the staging environment of that particular “storage_type.”

With the examples above serving as the input and the expected output, ChatGPT gives a more satisfactory answer:

Here’s a Python function that aggregates the list of metrics by their storage_type label:

```python
from collections import defaultdict

def aggregate_metrics_by_storage_type(metrics):
    # Initialize dictionaries to store aggregated values
    storage_type_totals = defaultdict(int)
    environment_totals = defaultdict(lambda: defaultdict(int))

    # Iterate through each metric
    for metric in metrics:
        # Extract the storage type and value of the metric
        storage_type = metric["labels"]["storage_type"]
        value = metric["value"]

        # Increment the total for the storage type and environment
        storage_type_totals[storage_type] += value
        environment_totals[storage_type][metric["labels"].get("environment", "unknown")] += value

    # Transform the aggregated values into the desired format
    results = []
    for storage_type, total in storage_type_totals.items():
        environment_total = dict(environment_totals[storage_type])
        results.append({
            "storage_type": storage_type,
            "total": total,
            "environmentTotal": environment_total
        })

    return results

results = aggregate_metrics_by_storage_type(metrics)
print(results)
```

Running the example above does produce the desired aggregation, although the output omits the zero values for unused environments:

```text
[{'storage_type': 'files', 'total': 10000000000, 'environmentTotal': {'production': 10000000000}}, {'storage_type': 'database', 'total': 60000000000, 'environmentTotal': {'development': 50000000000, 'staging': 10000000000}}]
```
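Closing that gap takes only a small change: pre-fill every environment with 0 the first time a storage_type appears. The sketch below is a hand-written variant, not ChatGPT's output. It assumes the three environments listed in the prompt are the complete set. The function name aggregate_with_zero_totals is hypothetical. It also sorts the result by storage_type so the output order is deterministic and matches the expected output.

```python
# Assumption: these are the only environments, as stated in the prompt.
ENVIRONMENTS = ("development", "production", "staging")


def aggregate_with_zero_totals(metrics):
    """Group metric values by storage_type, reporting every environment (0 if unused)."""
    groups = {}
    for metric in metrics:
        labels = metric["labels"]
        storage_type = labels["storage_type"]
        # Create the output record the first time this storage_type is seen,
        # with every known environment pre-filled with 0.
        if storage_type not in groups:
            groups[storage_type] = {
                "storage_type": storage_type,
                "total": 0,
                "environmentTotal": dict.fromkeys(ENVIRONMENTS, 0),
            }
        group = groups[storage_type]
        group["total"] += metric["value"]
        env_totals = group["environmentTotal"]
        env = labels["environment"]
        # .get() keeps this working if an unexpected environment ever appears.
        env_totals[env] = env_totals.get(env, 0) + metric["value"]
    # Sort by storage_type so the output order is deterministic.
    return sorted(groups.values(), key=lambda g: g["storage_type"])


metrics = [
    {"created_at": "2023-3-3T00:00:00.000Z", "value": 10000000000,
     "labels": {"environment": "production", "storage_type": "files"}},
    {"created_at": "2023-3-4T00:00:00.000Z", "value": 50000000000,
     "labels": {"environment": "development", "storage_type": "database"}},
    {"created_at": "2023-3-4T00:00:00.000Z", "value": 10000000000,
     "labels": {"environment": "staging", "storage_type": "database"}},
]

for record in aggregate_with_zero_totals(metrics):
    print(record)
```

This variant uses a plain dictionary instead of nested defaultdicts. That way, the zero entries are visible in the output rather than implied by missing keys.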

This suggests that ChatGPT performs well when implementing pure functions: functions without side effects that simply transform input into output, with little dependence on their environment.

ChatGPT Use Cases: The Best Programming Tasks for ChatGPT

To make the best use of ChatGPT, you can leverage its existing capabilities to provide code for the following use cases:

- Lazy typing boilerplate code: If you are lazy (as many programmers are), you can use IDE plugins, such as a ChatGPT extension for VS Code, to generate code right in your editor. Small, easy tasks (like injecting boilerplate code or adding comments and docstrings) can then be done with less typing, saving time and effort.

- Automation: ChatGPT is very useful when it comes to automating boring and mechanical tasks, like summarizing pieces of code or generating templates.

- Asking for suggestions and ideas: Asking for ideas, verifying POCs, and troubleshooting are suitable tasks for ChatGPT – as long as you verify the results. For example, you could ask ChatGPT to check for bugs or performance improvements related to a particular piece of code, but you still have to verify that ChatGPT is correct and that its proposal actually works.

- Learning tool: ChatGPT can help you understand and implement solutions to common algorithmic problems from LeetCode, HackerRank, and even online tutorials. Most of the time, it will produce clearly documented code with detailed explanations. A human would likely omit these steps, since writing a comprehensive play-by-play description of each algorithmic step takes time and effort.

Since ChatGPT is a fairly new tool, there are plenty of opportunities to evaluate its potential. As long as you understand its capabilities and shortcomings sufficiently, you can integrate ChatGPT functionality into your everyday workflows and UI interactions.

Conclusions – Programming with ChatGPT Pros and Cons

The code generation capabilities of the current free version of ChatGPT (GPT-3.5) from OpenAI are quite good for a number of use cases. But that doesn't mean you can skip checking whether the code works as expected. Ensure accuracy by writing unit tests, and never take it for granted that everything will work.

Wherever possible, refine your question so that it supplies more information to the tool. This generally results in much better code. However, some might see this as a double-edged sword, since you won't be learning to understand the code from your own perspective, but rather relying on the tool to give you the answer.

If you can, try the GPT-4 version of ChatGPT, which is offered for a fee. (Note: at the time of writing, OpenAI is at capacity and not accepting new registrations for GPT-4.) The latest version offers a number of improvements, including:
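As a concrete illustration of the unit-testing advice, here is a minimal pytest-style test module for the trim_char function from the first example. The test names and cases are illustrative. ChatGPT's one-line function is reproduced so the file runs on its own. Save it under a name such as test_trim_char.py (hypothetical) and run `pytest` in the same directory.

```python
# ChatGPT's function, reproduced so this test module is self-contained.
def trim_char(string, char):
    return string.strip(char)


def test_trims_both_ends():
    assert trim_char("###Hello World###", "#") == "Hello World"


def test_keeps_interior_characters():
    # Only leading and trailing occurrences should be removed.
    assert trim_char("#Hello#World#", "#") == "Hello#World"


def test_no_op_when_character_absent():
    assert trim_char("Hello", "#") == "Hello"


def test_empty_string():
    assert trim_char("", "#") == ""
```

Small cases like these take a minute to write, but they pin down the behavior you actually expect before you rely on generated code.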

- Less likely to get confused; more likely to provide better code.

- Has a larger context window. You can interactively discuss much larger programs with it, for longer, before it loses context and forces you to paste your code in again.

- Much faster response times.

As an experienced software engineer, I was a bit skeptical about ChatGPT's actual potential. It isn't going to replace human programmers anytime soon. Used correctly, though, it can make you much more productive, improving efficiency and reducing errors. Just make sure you use ChatGPT only for learning purposes or to assist with menial tasks that do not require human intervention.

Next steps:

If you’d like to practice Python with ChatGPT, the best way is to download a free copy of ActiveState Python, which will be automatically installed into a virtual environment. This provides you with a sandbox for experimentation without impacting your existing projects/installations.
